Across a whirlwind three days of Augmented World Expo USA 2025 in Long Beach, California, I tested out demos from some of the biggest names in XR, while also checking out prototypes from start-ups that could change the industry.

Well-known XR brands used AWE 2025 to announce major XR projects: Qualcomm announced an AR chip capable of on-device AI, Snap promised new, lightweight AR Specs in 2026, and XREAL shared more details on its Android XR-enabled glasses.

But I saw much more on the show floor. Here are all of the AWE 2025 highlights I saw or tested that people will find interesting!


A display that tracks your eyes without a camera


(Image credit: Michael Hicks / Android Central)


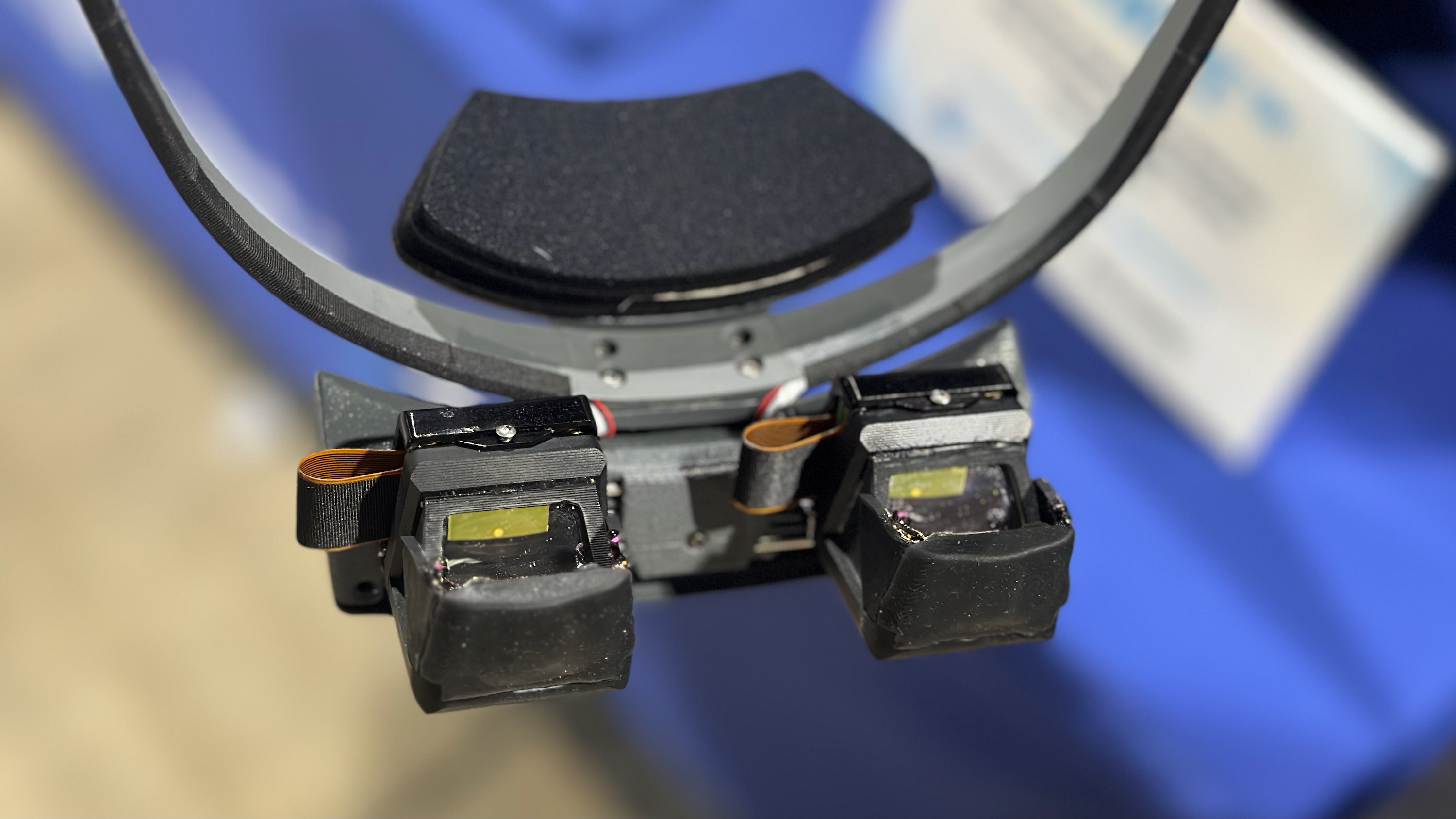

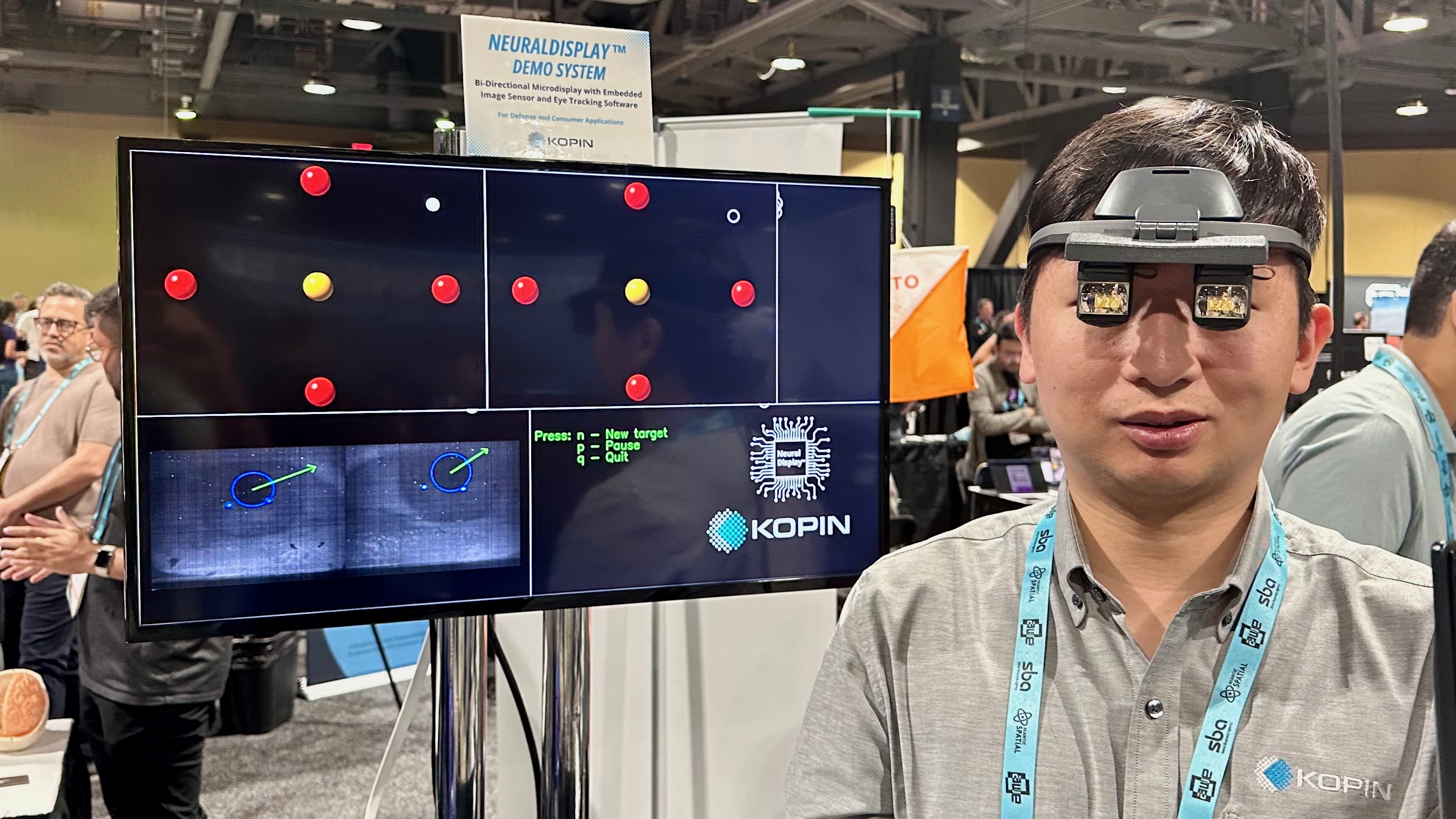

Kopin Corporation focuses on enterprise products and display components. At their booth, I wore a lightweight surgical HMD and a night-vision AR helmet for soldiers’ targeting and guidance. I also checked out their ultra-bright microLED display, the in-vogue tech for AR glasses and smartwatches.

But Kopin’s most promising tech for consumers is its NeuralDisplay. It uses “traditional RGB pixels, image-sensing pixels, and advanced signal processing algorithms” to track your eye movement and neurological response in a functional VR display.

I saw the tech in action at AWE (and you can see a video example below). The Kopin engineer shone a flashlight into his eye, and the display immediately detected his pupil dilation and adjusted the brightness and contrast to prevent any discomfort.

Kopin’s NeuralDisplay™ Eyetracking Software – YouTube

The version they showed off at AWE, built on OLED but compatible with microLED, can adjust the display’s content automatically to match the user’s gaze and simulate depth.

It can track data like the user’s blink rate to judge whether the person is fatigued, or use gaze data to enable foveated rendering, all without needing any SoC processing power. And in the case of military applications (or FPS games), this tech could determine that the user isn’t looking in the direction of a threat and guide their gaze that way.

It’s still an in-progress prototype that needs silicon improvements to enable better color accuracy and sensitivity. But CEO Michael Murray said that they proved wrong the naysayers who “said we couldn’t pull it off,” and that companies like Meta, Apple, and Anduril will be very interested in licensing their patent so that they can shed the weight of their eye-tracking cameras. We’ll see if this happens!

Viture’s (REDACTED) XR glasses and Switch 2 gaming

(Image credit: Michael Hicks / Android Central)

I have to be vague about this entry, as Viture — maker of XR gaming glasses like the Viture Pro XR — showed me its next-generation glasses at AWE but told me I couldn’t reveal anything except to “tease” readers and “get them excited for what’s coming.”

To avoid any trouble, I’ll quote without comment this Tom’s Guide article noting that the next Viture XR glasses will “be the first to use Sony’s latest Micro-OLED panels” with a “sharper, brighter and wider field of view than the current Pros’ 46-degree FoV.” It also suggested Viture will sell “multiple specs,” including one that supports 6 degrees of freedom (6DoF). Sure sounds promising, if true!

(Image credit: Michael Hicks / Android Central)

What I’ll tease is simple: Viture’s upcoming devices changed my perspective on XR glasses, and now I desperately want its new (redacted) glasses for my Nintendo Switch 2 and Steam Deck.

I played some Street Fighter on Viture’s Switch 2 using the Pro Mobile Dock, which attaches to the back of the console to stream content to XR glasses while charging it. The enhanced hardware sold me on handheld gaming with a TV-sized screen while relaxing in bed, in a way last-gen XR glasses with dimmer, low-FoV displays never could.

So watch out soon for Viture’s upcoming lineup of XR glasses!

Niantic Spatial’s Visual Positioning System tour guide

Niantic describing their new VPS-enabled model at AWE (Image credit: Michael Hicks / Android Central)

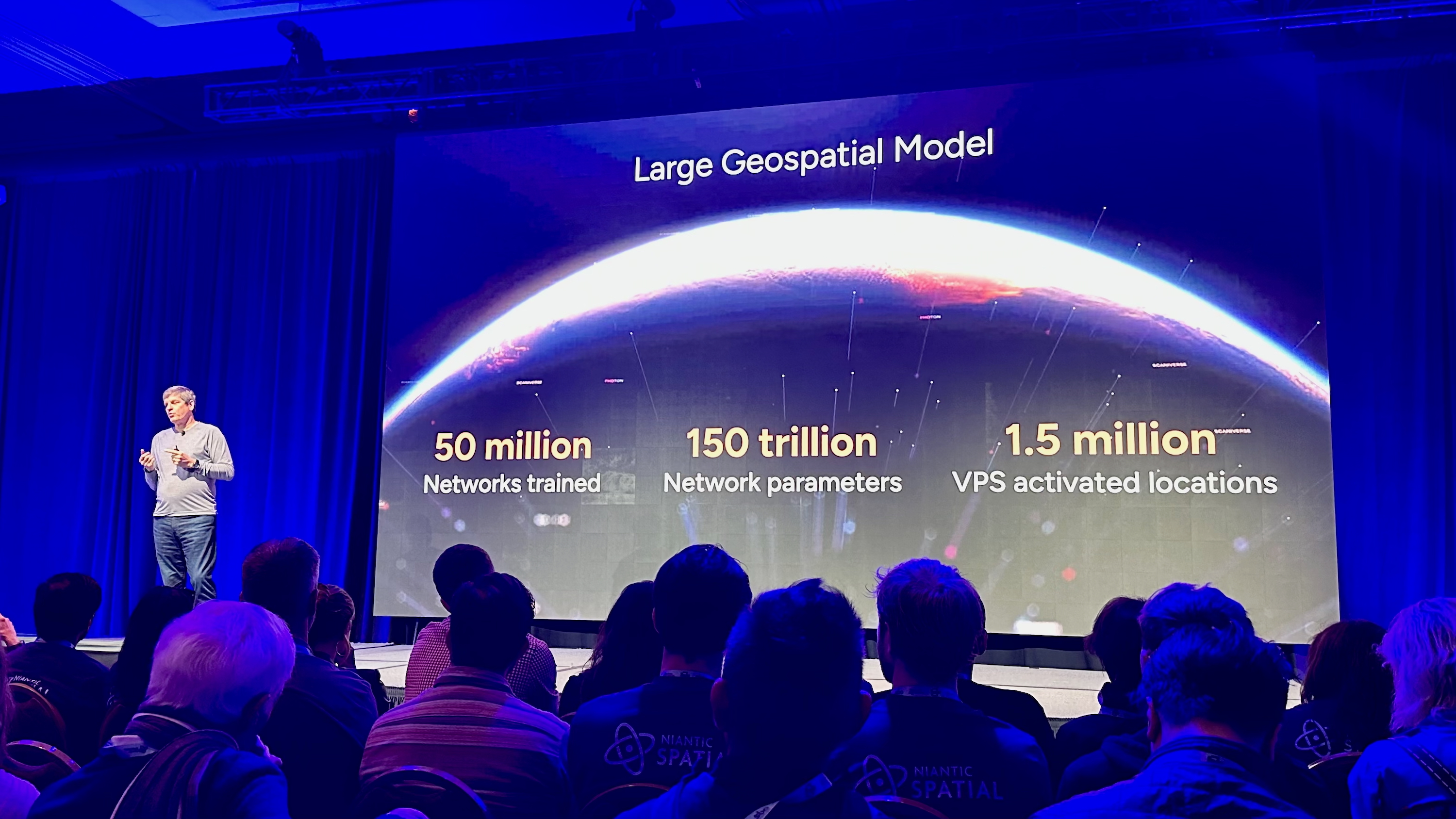

Smart glasses with multimodal AI like Ray-Ban Meta glasses have you take a photo of an object or landmark to analyze it. But the eventual goal for AR glasses is to use GPS and gaze tracking to know with precision what you’re looking at, in order to provide accurate info. And Niantic Spatial is working on that system.

The former Pokémon Go makers took me to a street next to the AWE convention center where they’d applied their Visual Positioning System (VPS) and had me wear AR Snap Spectacles.

Their little XR assistant, Dot, then served as a tour guide. If I tapped my fingers together and asked about the mosaic artwork at my feet, it would describe the art style, artist, and city initiative behind it, with options to learn more. Or if I asked about the building across the street, it would tell me about its history as a comedy club.

(Image credit: Michael Hicks / Android Central)

The idea behind Niantic’s VPS is that they’ll build an educational AR equivalent to Google Maps that’s “independent of specific hardware vendors,” so Android XR and Meta glasses owners can learn about the world with “centimeter-level” geolocation. They have 1.5 million pre-mapped locations already, and they offer “private VPS” for companies to make their own AR educational tours; I’d imagine it working well for a museum, or a theme park like Disneyland.

The demo itself was a bit finicky. Dot occasionally refused to answer my questions, blathering on about whatever it thought I was next to. The Snap Spectacles battery reached critical lows after about 15 minutes of Q&A, with the display struggling with the sunny weather; it’s hard to say when AR glasses hardware will be ready for a city tour. But when it worked, it worked very well, and I could see both kids and curious adults loving this software…once the AR hardware is ready for it.

Sony’s premium XYN headset

(Image credit: Michael Hicks / Android Central)

The Sony XYN (pronounced “zin”), like Samsung Project Moohan, has the premium Snapdragon XR2+ Gen 2 chipset with tons of RAM and micro-OLED quality. If I hadn’t already demoed Moohan last month, the insane 3552×3840 resolution would have blown my mind. But being able to get up close to a virtual book page and read every word without straining, or examine the details of a painting, is still beyond what any current VR headset can offer.

Sony also showed off subtle finger controllers that you can squeeze to select and move spatial content. Hand tracking works pretty well on most recent headsets like the Quest and Vision Pro, but having a wearable controller adds more precision so you’re not vainly struggling with missed camera inputs while trying to work.

Almost no one will end up using Sony’s premium XYN headset; it’s designed for “spatial content creation,” meaning only enterprise users willing to drop nearly five grand to create CADs will need one. But it’s still a promising sign of how immersive XR headsets are getting, and I love the flip-up display design for productivity.

Android XR smart ring controls

(Image credit: Michael Hicks / Android Central)

At the Qualcomm booth, there was a space for KiWear, a smart ring reference design for gesture controls on AR, XR, and smart glasses. And it’s surprisingly accurate and intuitive.

Connected to RayNeo X3 Pro glasses, the ring could control the display UI using simple finger movements or rotations around your finger. The IMU could differentiate between pinches away from or towards you for different functions, and the built-in PPG could display health data on the glasses.

You can also reach the ring out to tap virtual objects or use the ring touchpad to select or scroll. It even worked for accurate gaming controls when playing Fruit Ninja.

Given that Qualcomm announced that Android XR will support smart ring controls, I wonder if the next Samsung Galaxy Ring will be more specifically designed for XR controls to pair with Moohan and its smart glasses. I was never sold on the idea of a smart ring controller, but the KiWear ring’s accuracy changed my mind.

All the other AWE 2025 highlights

(Image credit: Michael Hicks / Android Central)

bHaptics’ TactSuit Pro and TactGloves add haptics support for over 100 Meta Quest games, including many of our favorite VR titles. I’ve seen it at XR events for years but finally tried it at AWE 2025, immersing myself in a space demo where I repaired my ship and pet my purring cat.

Aside from the TactSuit making me a bit ticklish, I walked away impressed by how much it helped me sink into the experience. My outfit would cost more than my Quest 3, and I’m not sure I want to feel discomfort during shooters, but I definitely think VR power users would get a kick out of it.

(Image credit: Michael Hicks / Android Central)

But if we’re talking immersion, I have to mention Anywhere Bungee VR, which won AWE’s Best in Show Playground award. You climb onto a contraption and literally tip over to simulate the feeling of bungee jumping off of a Tokyo skyscraper, with wind machines to help simulate the real terror of falling.

I think this kind of contraption would be perfect as a sideshow for VR exhibition centers like Sandbox VR, and I’d be curious to see what other extreme sports like skydiving or base jumping could be recreated in a setup like this.

(Image credit: Michael Hicks / Android Central)

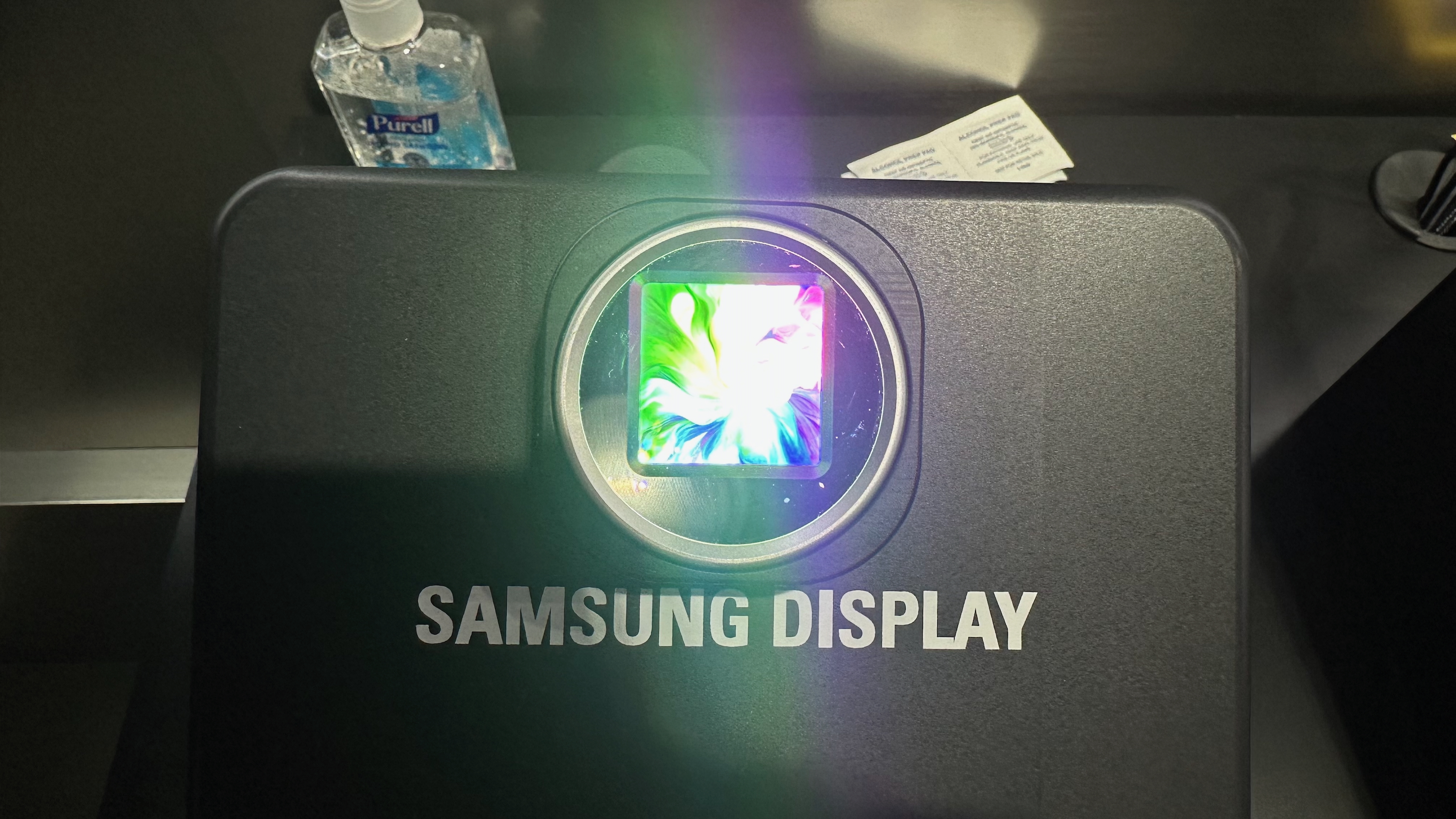

Samsung showed off its new OLED on Silicon (OLEDoS) displays at AWE, including a 1.4-inch RGB panel that hits 5,000 pixels per inch and a brighter 1.3-inch, 4,200 PPI panel that hits a whopping 20,000 nits. For context, the Apple Vision Pro hits about 3,400 PPI, while Moohan allegedly hits 3,800 PPI.

My phone camera couldn’t possibly capture the visual quality, but I could lean up against the display and see levels of color, detail, and brightness that blow most current VR headset displays out of the water. So it’s exciting to see Samsung Display challenge Sony in an XR display arms race.
