Introducing Sora and video playground in Azure AI Foundry

The video playground in Azure AI Foundry is your high-fidelity testbed for prototyping with cutting-edge video generation models – like Sora from Azure AI Foundry Models – ready for commercial use. Read our Tech Community launch blog on gpt-image-1 and Sora.

Modern development involves working across multiple systems—APIs, services, SDKs, and data models—often before you’re ready to fully commit to a framework, write tests, or spin up infrastructure. As the complexity of software ecosystems increases, the need for safe, lightweight environments to validate ideas becomes critical. Video playground was built to meet this need.

Purpose-built for developers, video playground offers a controlled environment to experiment with prompt structures, evaluate how consistently a model adheres to your prompts, and optimize outputs for industry use cases. Whether you’re building AI-native video products and tools or transforming your enterprise workflows, video playground supports your planning and experimentation, so you can iterate faster, de-risk your workflows, and ship with confidence.

Rapidly prototype from prompt to playback to code

Video playground offers an on-demand, low-friction environment designed for rapid prototyping, API exploration and technical validation with video generation models. Think of it as your high-fidelity prototyping environment, built to help you build better, faster and smarter, with no need to configure localhost, import clashing dependencies or worry about compatibility between build and model.

Sora from Azure OpenAI is the first model released in video playground. It comes with its own API, a unique offering available to Azure AI Foundry users. Once your initial experimentation in video playground is done, you can use the API in VS Code to continue scaled development for your use case.

Iterate faster: Experiment with text prompts and adjust generation controls like aspect ratio, resolution and duration.

Prompt optimization: Debug, tune and re-write prompt syntax with AI, visually compare outcomes across the variations you’re testing, use pre-built industry prompts, and build your own prompt variations in the playground, grounded in model behavior.

Consistent interface for API: Everything in video playground mirrors the model API structure, so what works here translates directly into code, with predictability and repeatability.
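As a rough illustration of how playground settings might carry over into code, the sketch below bundles a prompt with the three generation controls named above (aspect ratio, resolution and duration) into a request body. The `GenerationRequest` class and the payload keys (`prompt`, `width`, `height`, `n_seconds`) are assumptions made for illustration, not confirmed API fields. Treat the playground’s own code samples as the source for the real request shape.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GenerationRequest:
    """Hypothetical bundle of a prompt and the playground's generation controls."""

    prompt: str
    aspect_ratio: tuple  # (width units, height units), e.g. (16, 9)
    height: int          # vertical resolution in pixels
    duration_seconds: int

    def width(self) -> int:
        """Derive the pixel width from the aspect ratio and height."""
        ratio_w, ratio_h = self.aspect_ratio
        return self.height * ratio_w // ratio_h

    def to_payload(self) -> dict:
        """Build a JSON-serializable body; the key names are assumptions."""
        return {
            "prompt": self.prompt,
            "width": self.width(),
            "height": self.height,
            "n_seconds": self.duration_seconds,
        }


if __name__ == "__main__":
    request = GenerationRequest(
        prompt="A paper boat drifting down a rain-soaked street",
        aspect_ratio=(16, 9),
        height=720,
        duration_seconds=5,
    )
    print(request.to_payload())
```

Keeping the controls in one immutable object makes it easy to log exactly which settings produced a given clip, which helps when you later compare generations.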

Features

We built video playground for developers who want to experiment with video generation. Video playground is a full-featured, controlled environment for high-fidelity experiments designed for model-specific APIs, and a great demo interface for your Chief Product Officer and Engineering VP.

Model-specific generation controls: Adjust key controls (e.g. aspect ratio, duration, resolution) to deeply understand specific model responsiveness and constraints.

Pre-built prompts: Get inspired on how you can use video generation models like Sora for your use case. The pre-built prompts tab contains a set of 9 videos curated by Microsoft.

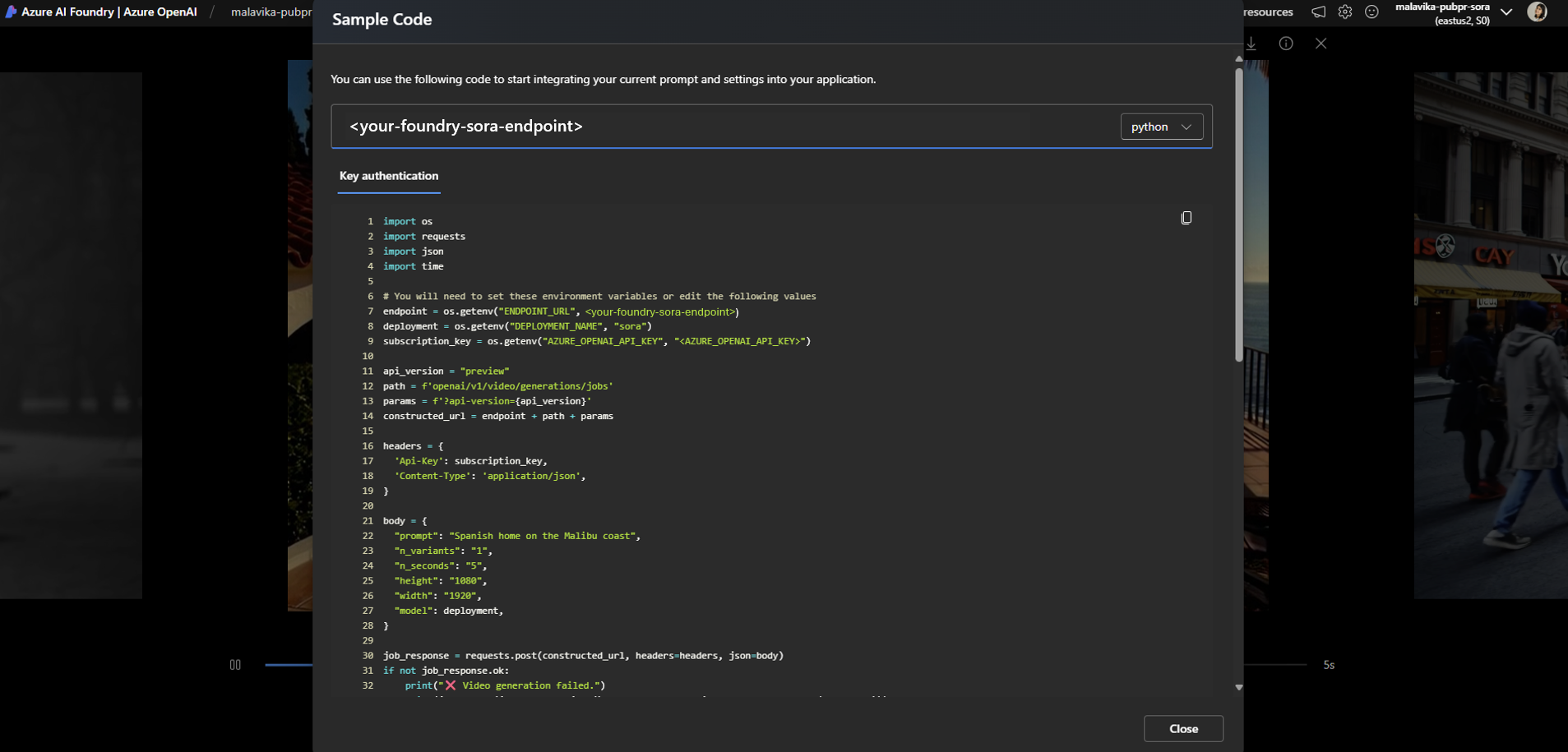

Port to production with multi-lingual code samples: Use the “View Code” multi-lingual code samples (Python, JavaScript, Go, cURL) for your video output, prompts and generation controls. The samples reflect the API structure; in the case of Sora from Azure OpenAI, this is the Sora API, a unique offering available to Azure AI Foundry users. What you create in the video playground can be easily ported into VS Code so that you can continue scaled development there with the API.

Side-by-side observations in grid view: Visually observe outputs across prompt tweaks or parameter changes.

Azure AI Content Safety integration: With all model endpoints integrated with Azure AI Content Safety, harmful and unsafe videos are filtered.
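The side-by-side comparison in grid view amounts to sweeping prompts and generation controls in a structured way. A minimal sketch of that idea in code, assuming a hypothetical `build_variation_grid` helper and illustrative dictionary keys (none of which come from the Sora API), enumerates every combination so each output can be labelled and compared:

```python
from itertools import product


def build_variation_grid(prompts, durations, resolutions):
    """Return one labelled variation per combination of the inputs.

    The dictionary keys are illustrative, not API field names.
    """
    grid = []
    for prompt, seconds, (width, height) in product(prompts, durations, resolutions):
        grid.append({
            "label": f"{width}x{height}, {seconds}s",
            "prompt": prompt,
            "width": width,
            "height": height,
            "seconds": seconds,
        })
    return grid


if __name__ == "__main__":
    variations = build_variation_grid(
        prompts=["A fox running through snow", "A fox running through snow at dusk"],
        durations=[5, 10],
        resolutions=[(480, 480)],
    )
    for variation in variations:
        print(variation["label"], "|", variation["prompt"])
```

Changing one dimension at a time (a prompt tweak, or a single control) tends to make differences between outputs easier to attribute.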

See a demo of these features and Sora in video playground in our dedicated breakout session at Microsoft Build 2025.

There’s no need to find, build or configure a custom localhost UI for video generation, hope that it will automatically work with the next state-of-the-art model, or spend time resolving cascading build errors caused by the package or code changes that new models require. The video playground in Azure AI Foundry gives you version-aware access: build with the latest models, with API updates surfaced in a consistent UI.

What to test for in video playground

As you plan your production workload, consider the following questions while visually assessing your generations in video playground:

Prompt-to-Motion Translation

Does the video model interpret my prompt in a way that makes logical and temporal sense?

Is motion coherent with the described action or scene? How could I use Re-write with AI to improve my prompt?

Frame Consistency

Do characters, objects, and styles remain consistent across frames?

Are there visual artifacts, jitter, or unnatural transitions?

Scene Control

How well can I control scene composition, subject behavior, or camera angles?

Can I guide scene transitions or background environments?

Length and Timing

How do different prompt structures affect video length and pacing?

Does the video feel too fast, too slow, or too short?

Multimodal Input Integration

What happens when I provide a reference image, pose data, or audio input?

Can I generate video with lip-sync to a given voiceover?

Post-Processing Needs

What level of raw fidelity can I expect before I need editing tools?

Do I need to upscale, stabilize, or retouch the video before using it in production?

Latency & Performance

How long does it take to generate video for different prompt types or resolutions?

What’s the cost-performance tradeoff of generating 5s vs. 15s clips?
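The latency questions above can be approached with a small timing harness. The sketch below is an assumption-laden illustration: `time_generations`, `wait_per_video_second` and `fake_generate` are hypothetical names, and `fake_generate` merely sleeps in place of a real generation call. It measures wall-clock time only and does not estimate cost.

```python
import time
from statistics import mean


def time_generations(generate, clip_lengths, repeats=3, clock=time.perf_counter):
    """Return the mean wall-clock seconds taken per requested clip length.

    `generate` is any callable that accepts a clip length in seconds.
    """
    results = {}
    for seconds in clip_lengths:
        samples = []
        for _ in range(repeats):
            start = clock()
            generate(seconds)
            samples.append(clock() - start)
        results[seconds] = mean(samples)
    return results


def wait_per_video_second(results):
    """Normalize each timing by clip length so 5s and 15s clips are comparable."""
    return {seconds: elapsed / seconds for seconds, elapsed in results.items()}


def fake_generate(seconds):
    """Stand-in for a real generation call; it only sleeps briefly."""
    time.sleep(0.001 * seconds)


if __name__ == "__main__":
    timings = time_generations(fake_generate, [5, 15])
    for seconds, ratio in wait_per_video_second(timings).items():
        print(f"{seconds}s clip: {ratio:.4f}s of waiting per second of video")
```

To use it against a real deployment, you would replace `fake_generate` with a function that submits a request and blocks until the video is ready.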

Run Sora and other models at scale using Azure AI Foundry, with no infrastructure needed. Our recent Microsoft Mechanics video shows the Sora API in action.

Get started now

Sign in or sign up to Azure AI Foundry.

Create a Foundry Hub and/or Project.

Create a model deployment for Azure OpenAI Sora from the Foundry Model Catalog or directly from video playground.

Prototype in video playground; iterate over text prompts and optimize generation controls for your use case.

Prototype done? Switch to scaled development in VS Code with the Sora from Azure OpenAI API.

Create with Azure AI Foundry

Read our Tech Community blog on gpt-image-1 and Sora.

