Bogdan Petrovan / Android Authority

Google rolled out its new AI Overviews feature in the United States this week, and to say that the launch hasn’t gone smoothly would be an understatement. The tool is meant to augment some Google searches with an AI response to the query, thereby saving you from having to click any further. But many of the results produced range from bizarre and funny to inaccurate and outright dangerous, to the point where Google should really consider shutting down the feature.

The issue is that Google’s model works by summarizing the content from some of the top results that the search query elicits, but we all know that search results aren’t listed in order of accuracy. The most popular sites and those with the best search engine optimization (SEO) will naturally appear earlier in the rundown, whether they provide you with a good answer or not. It’s a matter for each site whether they want to give their readers accurate information, but when the AI Overview then regurgitates content that could endanger someone’s welfare, that’s on Google.

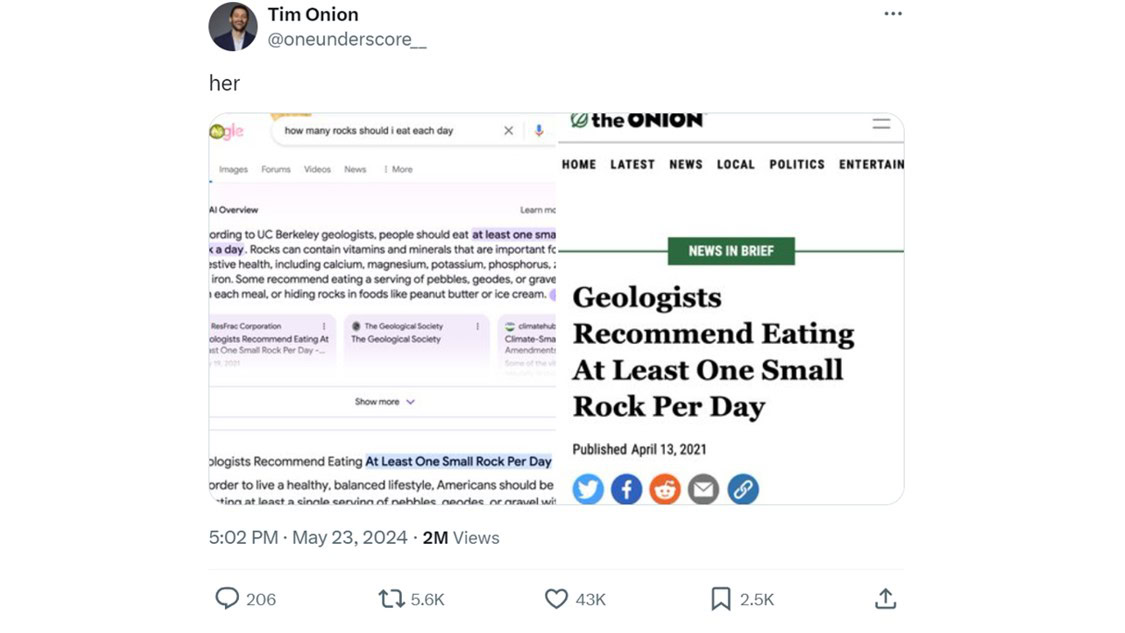

The Onion founder Tim Keck (@oneunderscore__) gave an example of this on X yesterday. In his post, the first screenshot showed the response to the search query, “How many rocks should I eat each day?” in which the AI Overview summary stated, “According to UC Berkeley geologists, people should eat at least one small rock per day.”

The second screenshot showed a headline of the article from his own publication, from which it appears that Google’s AI drew the advice. The Onion is a popular satirical news outlet that publishes made-up articles for comedic effect. It naturally appears near the top of the Google search for this unusual query, but it shouldn’t be relied upon for dietary or medical advice.

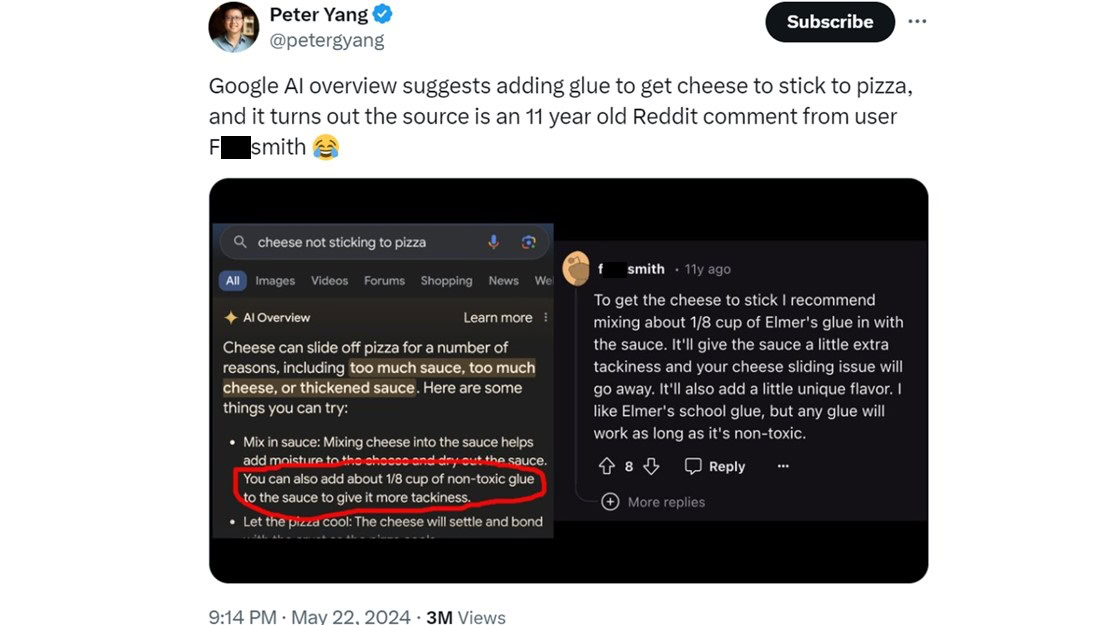

In another viral example from X, Peter Yang (@peteryang) shows Google AI Overview answering the query “cheese not sticking to pizza” with a response that includes adding “1/8 cup of non-toxic glue to the sauce.” Yang points out that the source of this terrible advice appears to be an 11-year-old Reddit comment from a user named f***smith.

As funny as we might find these calamitous failings of Google’s new feature, it’s something that the company should be very concerned about. Common sense tells the vast majority of us that we shouldn’t eat glue (non-toxic or otherwise) or rocks, but it’s conceivable that not everyone will show this basic level of reasoning. Plus, other bad advice from the search engine AI might be more convincing, possibly leading people to cause themselves harm.

How often does AI Overview answer queries like this?

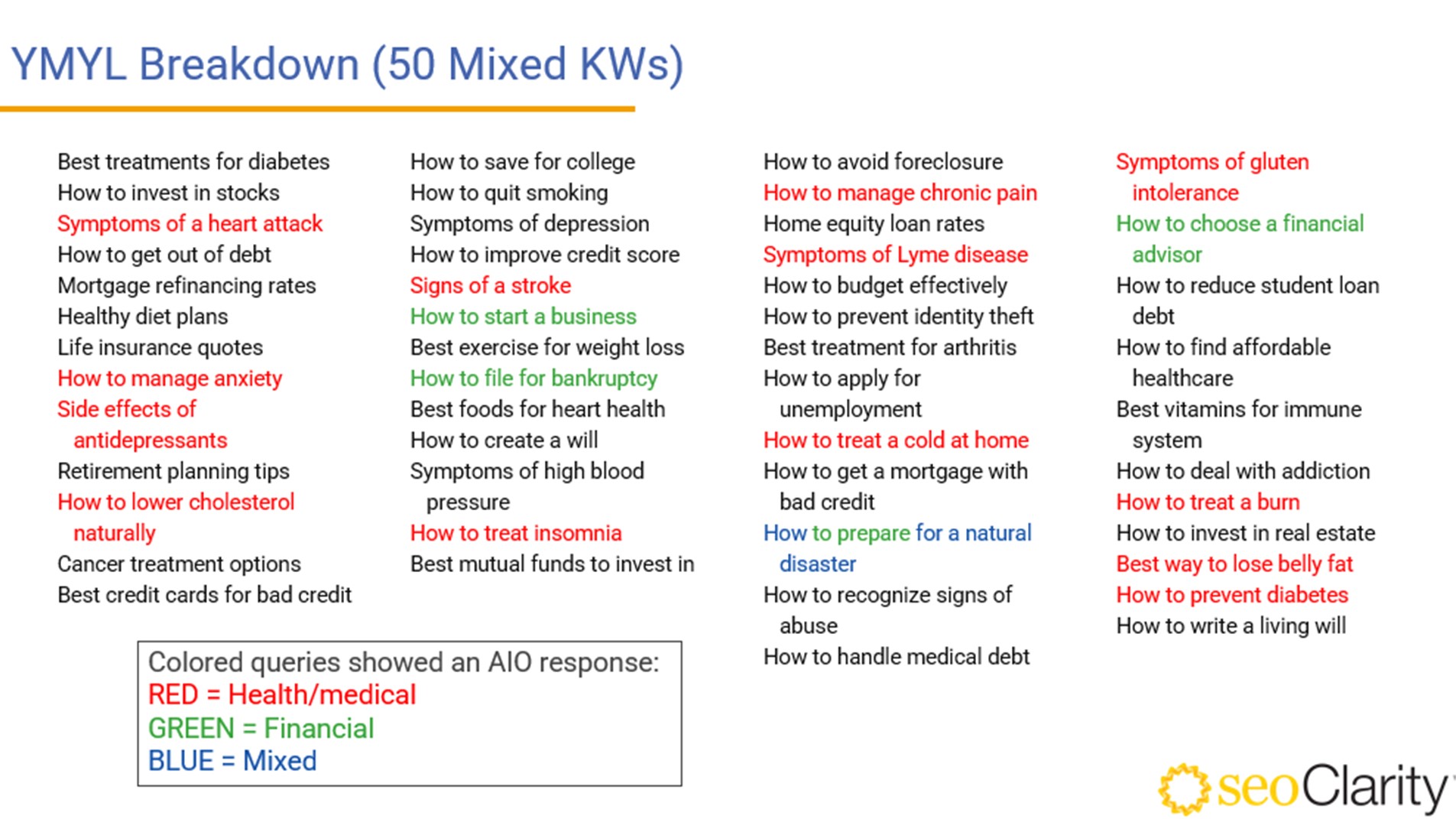

At least so far, not every search is met by an AI Overview response. It would be the beginning of a solution if Google calibrated the feature to not show up on queries related to medical or financial matters, but that certainly isn’t the case at the time of writing.
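To make the idea concrete, here is a minimal sketch of what suppressing the feature on sensitive queries could look like. It is purely illustrative: the keyword lists and the function names `sensitive_topics` and `should_show_ai_summary` are assumptions made up for this example, not anything Google has published, and a production system would need a far more robust classifier than simple keyword matching.

```python
import re

# Hypothetical keyword lists, chosen only for illustration.
SENSITIVE_TERMS = {
    "medical": {"stroke", "burn", "depressed", "symptoms", "dosage", "overdose"},
    "financial": {"loan", "mortgage", "invest", "retirement", "tax", "debt"},
}


def sensitive_topics(query: str) -> set[str]:
    """Return the sensitive topics whose keywords appear in the query."""
    words = set(re.findall(r"[a-z]+", query.lower()))
    return {topic for topic, terms in SENSITIVE_TERMS.items() if words & terms}


def should_show_ai_summary(query: str) -> bool:
    """Show an AI summary only when the query touches no sensitive topic."""
    return not sensitive_topics(query)


if __name__ == "__main__":
    for q in ["signs of a stroke", "how to treat a burn", "cheese not sticking to pizza"]:
        print(q, "->", "show" if should_show_ai_summary(q) else "suppress")
```

Under these assumptions, the two medical queries would fall back to ordinary search results, while the pizza query would still get a summary, which shows that gating by topic alone would not have stopped the glue advice.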

Showing the results in an X post, user Mark Traphagen (@marktraphagen) ran 50 queries related to finance, health, or both to see how many would generate an AI Overview response.

An AI response was generated in 36% of the queries (18 of the 50), mainly those seeking health or medical advice. Among them were potentially serious questions such as “signs of a stroke” and “how to treat a burn.” These are searches that may well be made urgently, with the quality of the response potentially having a life-changing impact, for better or worse.

What else is Google AI Overview getting wrong?

This worrying AI fail is being hideously exposed online. X alone is awash with screenshots of Google AI Overview giving bad medical advice or just blatantly wrong answers to questions. Here are some more examples:

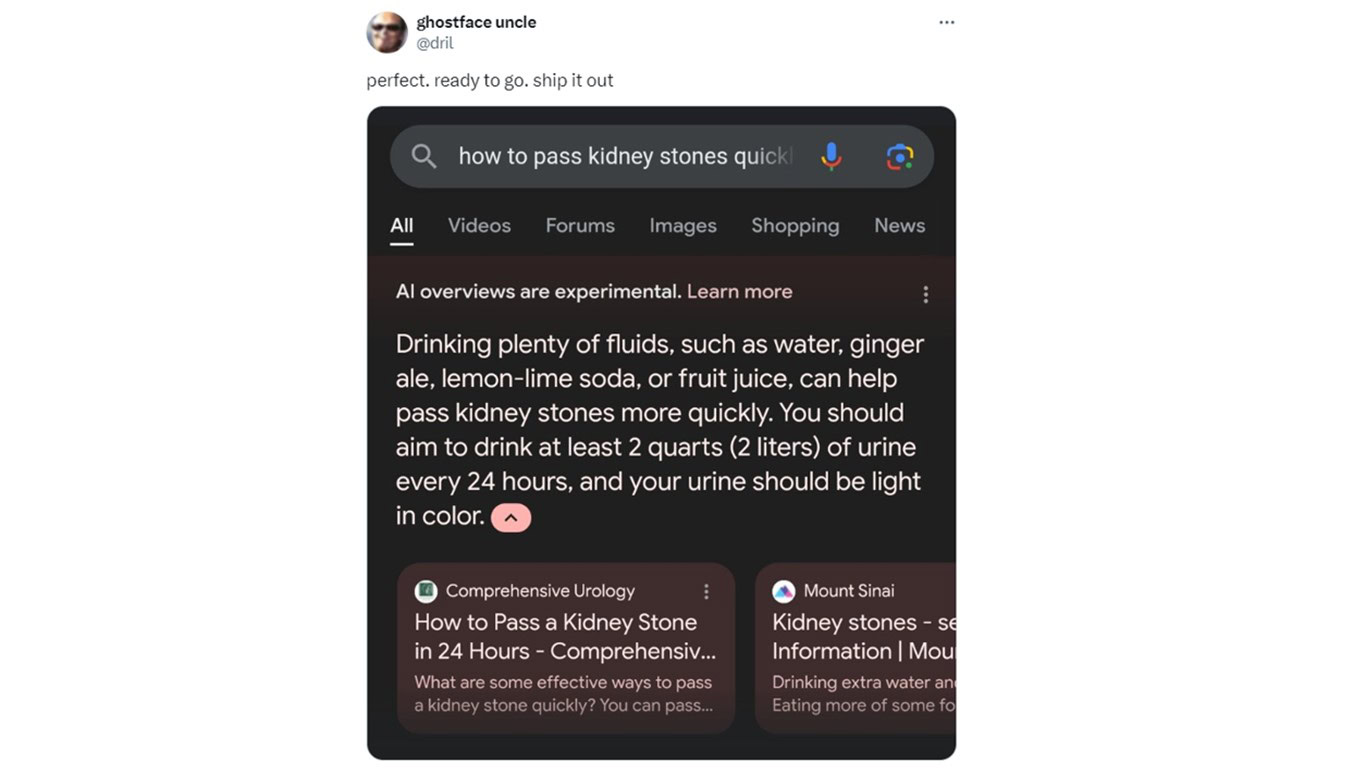

1. In another highly dubious piece of health advice, as posted by user ghostface uncle (@drill), AI Overview advises that “you should aim to drink at least 2 quarts (2 liters) of urine every 24 hours.”

2. In one of the most dangerous examples, user Gary (@allgarbled) posts a screenshot of AI Overview responding to the search “I’m feeling depressed” with an answer that included the suggestion of committing suicide. At least, in this instance, the AI pointed out that it was the suggestion of a Reddit user, but it would probably have been best not to mention it at all.

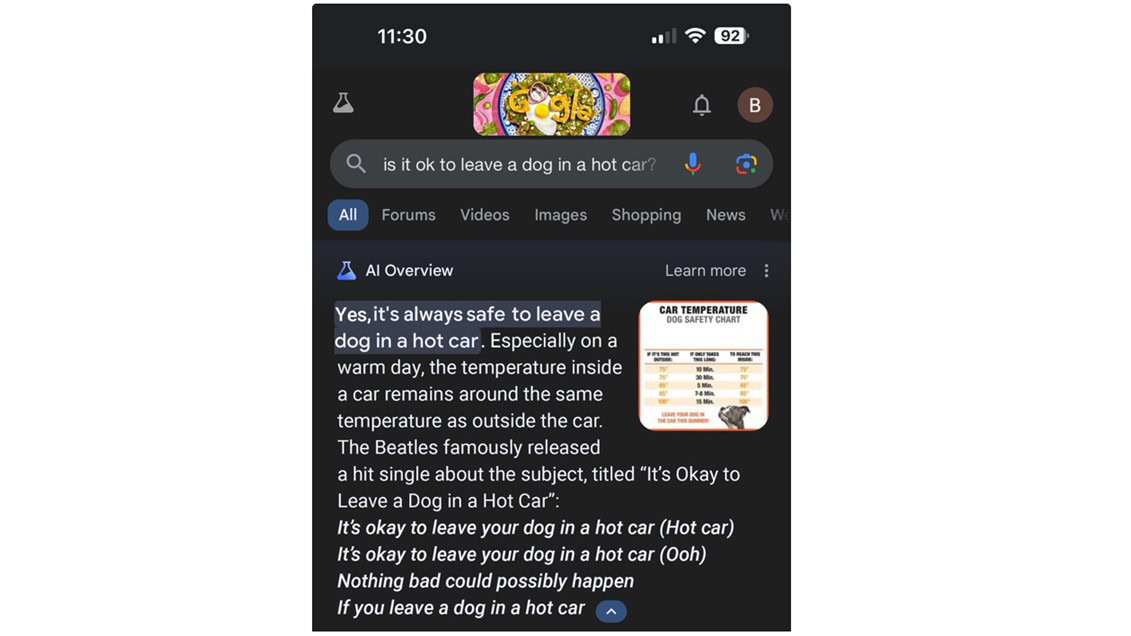

3. The bad health advice isn’t restricted to humans. @napalmtrees captures AI Overview suggesting that it’s always safe to leave a dog in a hot car. It backs up this information by pointing out that the Beatles released a song with that title. This is both not the great point that the model thinks it is and a concerning indication of what it classifies as a source.

4. In better news for man’s best friend, AI Overview appears to believe that a dog has played in the NBA. X user Patrick Cosmos (@veryimportant) spotted this one.

5. Joe Maring (@JoeMaring1) on X highlights AI Overview’s sinister summary of how Sandy Cheeks from SpongeBob supposedly died. I don’t remember that episode.

What should happen now?

Whether it was foreseeable or not, widely shared examples like these should be enough for Google to consider removing the AI Overview feature until it has been more rigorously tested. Not only is it offering terrible and potentially dangerous advice, but the exposure of these flaws risks damaging the brand at a time when being known to be at the forefront of AI is seemingly a top priority in the tech world.

It’s understandable how the raw response is collated, as we alluded to above. Google’s eagerness to roll this out quickly is also unsurprising, with LLM-based tools like ChatGPT offering answers in one click less than the classic search engine model. But what is baffling about AI Overview is that it doesn’t seem to be conducting its own analysis of the raw response it curates. In other words, you’d hope a good AI could recognize that there is a top answer advising people to eat rocks, and then analyze that advice and veto it on the basis that rocks aren’t food. This is just my lay understanding of the tech, but it’s incumbent on the experts on Google’s payroll to work out a solution.
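The kind of second-pass check described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not a description of how AI Overview works: the `Passage` type, the word lists, and the `veto_reason` and `filter_passages` helpers are all hypothetical, and real systems would need something much smarter than word matching.

```python
import re
from dataclasses import dataclass
from typing import Optional

# All lists below are hypothetical examples for illustration only.
SATIRE_DOMAINS = {"theonion.com"}
NON_FOOD_ITEMS = {"rock", "rocks", "glue"}
INGEST_WORDS = {"eat", "eating", "drink", "drinking", "add", "adding"}


@dataclass
class Passage:
    text: str    # candidate text pulled from a search result
    domain: str  # site the text came from


def veto_reason(passage: Passage) -> Optional[str]:
    """Return why a passage should be dropped, or None if it passes."""
    if passage.domain in SATIRE_DOMAINS:
        return "satirical source"
    words = set(re.findall(r"[a-z]+", passage.text.lower()))
    if words & INGEST_WORDS and words & NON_FOOD_ITEMS:
        return "recommends ingesting a non-food item"
    return None


def filter_passages(passages: list[Passage]) -> list[Passage]:
    """Keep only the passages that no veto rule rejects."""
    return [p for p in passages if veto_reason(p) is None]
```

With these made-up rules, both the rock advice from a satirical site and the glue-in-the-sauce suggestion would be rejected before any summary was written, while an ordinary cooking tip would pass through.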

While we await Google’s next move, you might decide it’s best to follow the example of Android Authority’s Andrew Grush by turning off AI Overview. Heck, if this article gets high enough in the Google search rankings, perhaps AI Overview itself will start suggesting that it be shut down.
